The architecture chameleon - Lambda or container with the same code

At the beginning of an AWS software development project, some big architectural decisions need to be made. This is a classic: does AWS Lambda or a Docker-based microservice make more sense?

Well, with the GOpher Chameleon, you can have the best of both worlds: the very same code runs on Lambda or in a container.

The quest

Assume you are writing a todo app. Not that we don't have enough of those, but it's a nice, simple example problem to look at.

Now you want to create a REST API with a few endpoints, such as ping and todos.

Thinking about architecture: Is serverless Lambda the better choice?

What if the app needs to be deployed in a k8s cluster?

No problem with GO, GIN and the Ginadapter!

The old way: separate approaches

A GO Lambda

As an introduction, here is a simple GO Lambda function.

```go
package main

import (
	"context"
	"fmt"

	"github.com/aws/aws-lambda-go/lambda"
)

// MyEvent is the JSON payload the function expects.
type MyEvent struct {
	Name string `json:"name"`
}

// HandleRequest builds a greeting from the name in the event.
func HandleRequest(ctx context.Context, event MyEvent) (string, error) {
	return fmt.Sprintf("Hiho %s!", event.Name), nil
}

func main() {
	// Hand control to the Lambda runtime, which calls HandleRequest for each event.
	lambda.Start(HandleRequest)
}
```

With a plain Lambda function, the handler processes the incoming event directly.

Calling the Lambda function with this event:

```json
{
  "name": "megaproaktiv"
}
```

The fmt.Sprintf call in HandleRequest returns: Hiho megaproaktiv!

A GO Service

Writing a server that listens for requests is a totally different architecture. One way to do that in GO is the “Gin Web Framework”.

An example from the Gin documentation:

```go
package main

import (
	"net/http"

	"github.com/gin-gonic/gin"
)

func main() {
	router := gin.Default()

	// This handler will match /user/john but will not match /user/ or /user
	router.GET("/user/:name", func(c *gin.Context) {
		name := c.Param("name")
		c.String(http.StatusOK, "Hello %s", name)
	})

	// However, this one will match /user/john/ and also /user/john/send
	// If no other routers match /user/john, it will redirect to /user/john/
	router.GET("/user/:name/*action", func(c *gin.Context) {
		name := c.Param("name")
		action := c.Param("action")
		message := name + " is " + action
		c.String(http.StatusOK, message)
	})

	router.Run(":8080")
}
```

You can query the running server:

```text
curl http://localhost:8080/user/gernot
Hello gernot
```

The c.String(http.StatusOK, "Hello %s", name) call in the first handler takes the name “gernot” from the path and sends it back as the response.

A new way: one approach for both

The Ginadapter

The magic lies in the AWS-provided adapter: github.com/awslabs/aws-lambda-go-api-proxy

Usually, the Lambda handler in the app serves the incoming event directly. Here, the Lambda handler passes the event to the adapter instead. So you can use Gin syntax for routing, but there is no long-running server: each request is served by a Lambda invocation.

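```go
// Handler is invoked by Lambda for every API Gateway request.
// ginLambda is a *ginadapter.GinLambda wrapping the Gin router, set up during initialization.
// It converts the event into an http.Request, lets Gin route it, and converts the response back.
func Handler(ctx context.Context, req events.APIGatewayProxyRequest) (events.APIGatewayProxyResponse, error) {
	return ginLambda.ProxyWithContext(ctx, req)
}
```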

Where am I?

To detect whether it runs on Lambda or locally, the app simply checks the environment variable AWS_LAMBDA_RUNTIME_API.

The Lambda environment sets this variable, but it is absent when the app runs locally or in a container.

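```go
// On Lambda, hand each event to the adapter-based Handler;
// anywhere else (laptop, container), run Gin as a normal HTTP server.
if runtimeAPI, _ := os.LookupEnv("AWS_LAMBDA_RUNTIME_API"); runtimeAPI != "" {
	log.Println("Starting up in Lambda Runtime")
	lambda.Start(Handler)
} else {
	log.Println("Starting up on own")
	// Listen and serve on 0.0.0.0:8080 (or on $PORT if it is set).
	if err := router.Run(); err != nil {
		log.Fatal(err)
	}
}
```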
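If you want to unit-test this switch without touching real environment variables, one option is to pass the lookup function in. A minimal sketch, assuming hypothetical helper names `onLambda` and `listenAddr`; the fallback in `listenAddr` reproduces what Gin does when router.Run() gets no address, as its startup log states ("Environment variable PORT is undefined. Using port :8080 by default").

```go
package main

import (
	"fmt"
	"os"
)

// lookupFunc has the same shape as os.LookupEnv, so tests can inject values.
type lookupFunc func(key string) (string, bool)

// onLambda reports whether the Lambda runtime API variable is set and non-empty.
func onLambda(lookup lookupFunc) bool {
	v, ok := lookup("AWS_LAMBDA_RUNTIME_API")
	return ok && v != ""
}

// listenAddr mirrors Gin's default for router.Run() without arguments:
// use $PORT if it is set, otherwise fall back to :8080.
func listenAddr(lookup lookupFunc) string {
	if port, ok := lookup("PORT"); ok && port != "" {
		return ":" + port
	}
	return ":8080"
}

func main() {
	if onLambda(os.LookupEnv) {
		fmt.Println("would call lambda.Start(Handler)")
		return
	}
	fmt.Println("would call router.Run() on", listenAddr(os.LookupEnv))
}
```

In production you pass os.LookupEnv; in tests, a small map-backed function.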

See the code here.

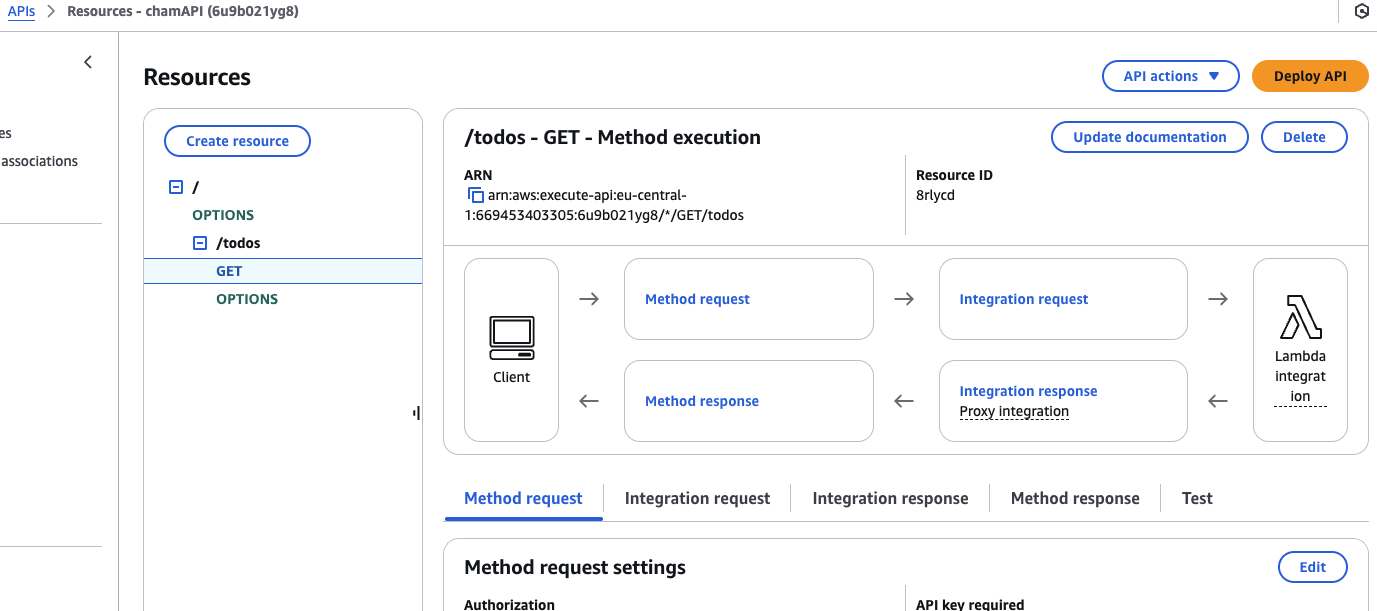

Simple API GW created with CDK

I have prepared a fully functional demo for you.

With CDK, we create a Lambda function and a simple API Gateway:

The code is in the infra directory of the repository.

```typescript
// REST API in front of the Lambda function `cham` (defined elsewhere in the stack).
// proxy: false means no catch-all resource; each route is added explicitly below.
const api = new apigateway.LambdaRestApi(this, "chamAPI", {
  handler: cham,
  proxy: false,
  defaultCorsPreflightOptions: {
    allowOrigins: apigateway.Cors.ALL_ORIGINS,
    allowMethods: ["OPTIONS", "GET", "POST", "PUT", "PATCH", "DELETE"],
    allowHeaders: ["Content-Type", "Authorization", "X-Amz-Date", "X-Api-Key", "X-Amz-Security-Token", "X-Amz-User-Agent"],
    allowCredentials: true,
  },
});

// GET /todos is forwarded to the same Lambda function.
const todos = api.root.addResource("todos");
todos.addMethod("GET", new apigateway.LambdaIntegration(cham));
```

This creates a complete API Gateway REST API, with GET /todos routed to the Lambda function.

See the code here.

How to run CDK: AWS CDK CLI reference

Running locally

The very same Lambda code runs locally on your laptop, without any help from AWS SAM or lambci.

In the app directory of the repository, you can start the server with task run.

You just need to install Task (taskfile) and Go.

```text
task run
task: [run] go run main.go
2025/05/04 18:11:16 Gin cold start
[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.
[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
 - using env: export GIN_MODE=release
 - using code: gin.SetMode(gin.ReleaseMode)
[GIN-debug] GET /ping --> main.init.0.func1 (3 handlers)
[GIN-debug] GET /todos --> cham/todo.List (3 handlers)
2025/05/04 18:11:16 Starting up on own
[GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.
Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.
[GIN-debug] Environment variable PORT is undefined. Using port :8080 by default
[GIN-debug] Listening and serving HTTP on :8080
```

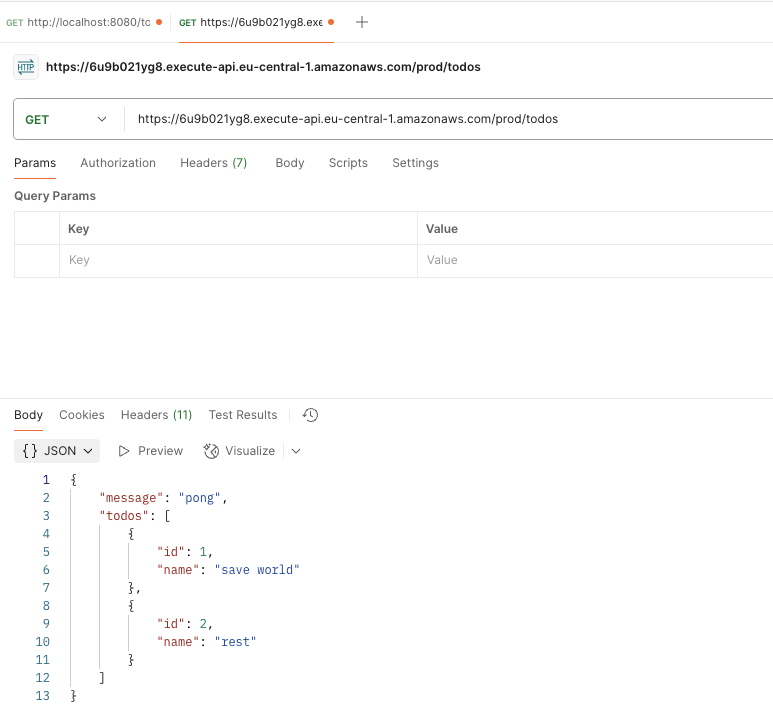

You can call the local server running on localhost:8080 with Postman.

Running API GW

After deploying with CDK (cdk deploy in the infra directory), you can also query the API Gateway.

Call the path /todos, prefixed with the API Gateway URL.

The request invokes the List function, whether it runs locally or on AWS:

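```go
package todo

import "github.com/gin-gonic/gin"

// List returns a static list of todos as JSON.
func List(c *gin.Context) {
	c.JSON(200, gin.H{
		"message": "pong",
		"todos": []map[string]any{
			{"id": 1, "name": "save world"},
			{"id": 2, "name": "rest"},
		},
	})
}
```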

In main, the path is routed to this function with:

```go
router.GET("/todos", todo.List)
```

More on API Gateway here.

Local

When you run the app locally, it shows the port it is listening on (Listening and serving HTTP on :8080). Each request, such as GET /todos, is logged as well:

```text
task run
task: [run] go run main.go
2025/05/04 17:46:48 Gin cold start
[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.
[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
 - using env: export GIN_MODE=release
 - using code: gin.SetMode(gin.ReleaseMode)
[GIN-debug] GET /ping --> main.init.0.func1 (3 handlers)
[GIN-debug] GET /todos --> cham/todo.List (3 handlers)
2025/05/04 17:46:48 Starting up on own
[GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.
Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.
[GIN-debug] Environment variable PORT is undefined. Using port :8080 by default
[GIN-debug] Listening and serving HTTP on :8080
[GIN] 2025/05/04 - 17:46:53 | 200 | 150µs | ::1 | GET "/todos"
```

Docker - local

With a few lines in a Dockerfile, you can start the server as a container:

```text
task docker-run
task: [docker-run] docker run -p 8080:8080 go-app
[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.
[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
 - using env: export GIN_MODE=release
 - using code: gin.SetMode(gin.ReleaseMode)
[GIN-debug] GET /ping --> main.init.0.func1 (3 handlers)
[GIN-debug] GET /todos --> cham/todo.List (3 handlers)
[GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.
Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.
[GIN-debug] Environment variable PORT is undefined. Using port :8080 by default
[GIN-debug] Listening and serving HTTP on :8080
2025/05/04 16:04:09 Gin cold start
2025/05/04 16:04:09 Starting up on own
[GIN] 2025/05/04 - 16:04:16 | 200 | 214.879µs | 172.17.0.1 | GET "/todos"
```

Does not work? Run task docker-build first :)

Dockerfile

The first stage installs packages and builds the app:

```dockerfile
# Stage 1: Build
FROM golang:1.20-alpine AS builder

# Install necessary build tools
RUN apk add --no-cache git

# Set the working directory inside the container
WORKDIR /app

# Copy go.mod and go.sum files
COPY go.mod go.sum ./

# Download dependencies
RUN go mod download

# Copy the rest of the application source code
COPY . .

# Build the Go application
RUN go build -o main .
```

The second stage takes only the compiled binary and runs it. The COPY --from=builder /app/main . line keeps the final image small, because the Go toolchain and the sources stay behind in the builder stage.

```dockerfile
# Stage 2: Run
FROM alpine:latest

# Install necessary runtime dependencies
RUN apk add --no-cache ca-certificates

# Set the working directory inside the container
WORKDIR /root/

# Copy the built binary from the builder stage
COPY --from=builder /app/main .

# Expose the port the app runs on
EXPOSE 8080

# Command to run the application
CMD ["./main"]
```

Results

The Ginadapter has been around for a few years. I have now worked with it for the first time, and I have to say: it makes development a lot simpler. Running the server locally, testing with Postman, or debugging with VS Code is fun.

In projects, we often discuss the architecture with our customers, and not everyone is a serverless fanboy. So it happens that we start serverless, and after some time in the project, ECS or Kubernetes becomes the new architecture.

With the chameleon approach: No Problem!

What’s next?

If you need developers and consulting to support your decision in your next GenAI project, don’t hesitate to contact us, tecRacer.

Want to work with us? Have a look at Cloud Consultant – AWS Serverless Development.

For more AWS development content, follow me on dev.to: https://dev.to/megaproaktiv. Want to learn GO on AWS? GO here

Enjoy building!

Thanks to

Photo by Hasmik Ghazaryan Olson / Unsplash